Hand Gestures and Hand Tracking for Web Browsing

Introduction

This project explores the field of Touchless User Interfaces (TUI) and focuses on three main tasks: hand gesture recognition, hand tracking, and their application. The objective is to control a computer and a robotic car via hand gestures and motion, without touching a keyboard, mouse, or screen.

By implementing different neural networks, the team successfully created a touchless interface and explored the different dynamics of human-computer interaction. You can find more information about the models and their results on our GitHub.

2020 is an unusual year. In the midst of COVID-19, frequently touching different surfaces might not be a good idea, so contactless interfaces can be an area of future development. We hope this project will inspire other ideas, and we hope to apply our knowledge to create applications that add value to society at large.

Hand Gesture Recognition

We present a lightweight gesture recognition model that uses 3D convolutions in order to achieve near state-of-the-art performance on the 20bn-jester validation set.

C3D Superlite net has 6 convolutional layers, 4 max-pooling layers, 1 LSTM layer, and 1 fully connected layer, followed by a softmax output layer. The training accuracy is 88% and the validation accuracy is 85%.
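To make the layer counts above more concrete, here is a minimal pure-Python sketch that traces how a video clip's shape could change through 6 convolutional and 4 max-pooling layers before reaching the LSTM. Only the layer counts come from this README. The layer order, channel counts, pool sizes, and the 16-frame 112x112 input are illustrative assumptions, not the project's actual configuration.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int, int]  # (frames, height, width, channels)

# Hypothetical layer plan: only the counts (6 conv, 4 pool) match the README.
# ("conv", out_channels): assumed "same" padding, so only channels change.
# ("pool", (t, h, w)): each dimension is floor-divided by its pool size.
LAYER_PLAN = [
    ("conv", 32), ("pool", (1, 2, 2)),
    ("conv", 64), ("pool", (2, 2, 2)),
    ("conv", 128), ("conv", 128), ("pool", (2, 2, 2)),
    ("conv", 256), ("conv", 256), ("pool", (2, 2, 2)),
]


def trace_shapes(input_shape: Shape, plan) -> List[Shape]:
    """Return the tensor shape after the input and after every layer."""
    frames, height, width, channels = input_shape
    shapes = [input_shape]
    for kind, arg in plan:
        if kind == "conv":
            channels = arg
        elif kind == "pool":
            pool_t, pool_h, pool_w = arg
            frames //= pool_t
            height //= pool_h
            width //= pool_w
        else:
            raise ValueError(f"unknown layer kind: {kind}")
        shapes.append((frames, height, width, channels))
    return shapes


def lstm_input_shape(shape: Shape) -> Tuple[int, int]:
    """Flatten each remaining time step into one feature vector for the LSTM."""
    frames, height, width, channels = shape
    return frames, height * width * channels


if __name__ == "__main__":
    clip = (16, 112, 112, 3)  # assumed input: 16 RGB frames of 112x112
    for shape in trace_shapes(clip, LAYER_PLAN):
        print(shape)
    print("LSTM input (steps, features):",
          lstm_input_shape(trace_shapes(clip, LAYER_PLAN)[-1]))
```

Under these assumptions, pooling shrinks the temporal axis along with the spatial ones, so the LSTM sees a short sequence of flattened feature maps rather than raw frames.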

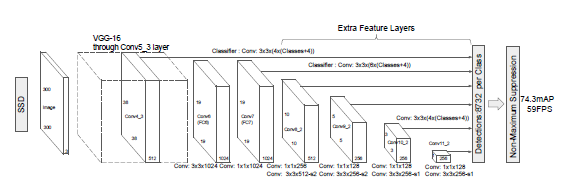

SSD_MobileNet_Hand_Tracker

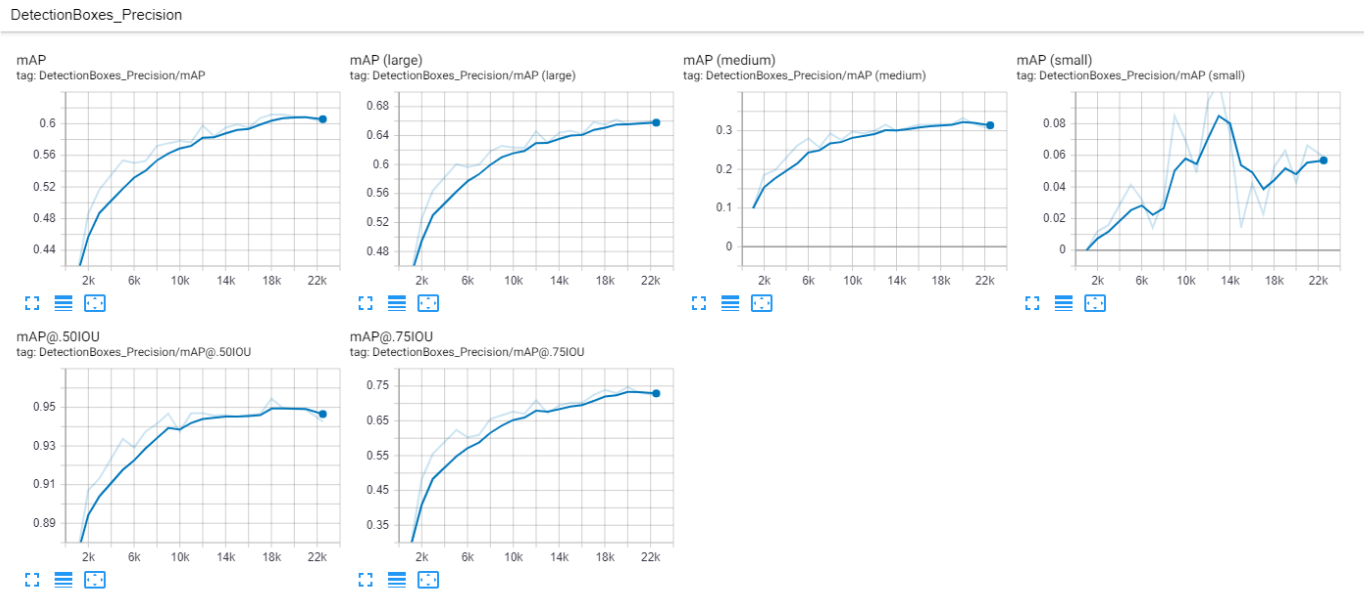

A hand tracker created using OpenCV and an SSD MobileNet v2 re-trained via transfer learning on the EgoHands Dataset. The goal was to use the EgoHands Dataset to perform transfer learning on the COCO-trained SSD MobileNet v2 from TensorFlow’s Object Detection API.

The EgoHands Dataset, curated by Indiana University, came with a set of labelled annotations that were used to generate the TFRecords files required to train the SSD MobileNet. The trained frozen inference graph was then used in conjunction with a multithreading approach implemented in OpenCV to detect when a hand was present in a user’s webcam input, along with its location on the screen. In the future, this could be extended to let the user interact with their computer’s interface, performing actions such as clicking, dragging and dropping, and even playing simple games. The final model was trained for 22,500 iterations.
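The multithreaded capture and hand-location steps described above can be sketched roughly as follows. This is a standard-library illustration, not the repo's actual code: `ThreadedFrameReader` and `boxes_to_pixels` are hypothetical names, and the reader accepts any function returning `(ok, frame)`, which is the shape of result that OpenCV's `cv2.VideoCapture.read` returns. The box helper assumes the normalized `[ymin, xmin, ymax, xmax]` format that the TensorFlow Object Detection API uses for detection boxes.

```python
import threading
from typing import Any, Callable, List, Sequence, Tuple


class ThreadedFrameReader:
    """Reads frames on a background thread and keeps only the newest one."""

    def __init__(self, read_fn: Callable[[], Tuple[bool, Any]]):
        self._read_fn = read_fn  # e.g. cv2.VideoCapture(0).read (assumption)
        self._lock = threading.Lock()
        self._latest = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self) -> "ThreadedFrameReader":
        self._thread.start()
        return self

    def _run(self) -> None:
        while not self._stopped.is_set():
            ok, frame = self._read_fn()
            if not ok:
                break  # source exhausted or camera unavailable
            with self._lock:
                self._latest = frame
        self._stopped.set()

    def latest(self) -> Any:
        """Return the most recent frame, or None if nothing was read yet."""
        with self._lock:
            return self._latest

    def stop(self) -> None:
        self._stopped.set()
        self._thread.join(timeout=1.0)


def boxes_to_pixels(
    boxes: Sequence[Sequence[float]],
    scores: Sequence[float],
    frame_width: int,
    frame_height: int,
    min_score: float = 0.5,  # illustrative threshold, not from the project
) -> List[Tuple[int, int, int, int]]:
    """Convert normalized [ymin, xmin, ymax, xmax] boxes to pixel corners.

    Returns (left, top, right, bottom) for each box whose score passes
    the threshold.
    """
    result = []
    for (ymin, xmin, ymax, xmax), score in zip(boxes, scores):
        if score >= min_score:
            result.append((
                int(xmin * frame_width), int(ymin * frame_height),
                int(xmax * frame_width), int(ymax * frame_height),
            ))
    return result
```

Reading frames on a separate thread means the detector always works on the newest frame instead of waiting on camera I/O, at the cost of silently dropping frames the detector is too slow to process.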

Code was modified based on the scripts created by Victor Dibia:

- Note that the original scripts were written for TensorFlow 1.x. Alterations were made accordingly and are present in this repo’s code. However, to use the TensorFlow 1.x API under TensorFlow 2.x, import tensorflow.compat.v1 in place of tensorflow.
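One way to handle the import so that TensorFlow 1.x-style code runs on either major version is sketched below. The helper name `load_tf1_style` is hypothetical and not part of this repo. It uses `tensorflow.compat.v1` when that module is available, falls back to plain `tensorflow` on older 1.x installs, and returns `None` when TensorFlow is not installed.

```python
import importlib


def load_tf1_style():
    """Return a module exposing the TensorFlow 1.x API, or None if unavailable."""
    try:
        # Present in TensorFlow 2.x (and late 1.x releases).
        tf = importlib.import_module("tensorflow.compat.v1")
    except ImportError:
        try:
            # Older 1.x installs: the top-level module already is the 1.x API.
            return importlib.import_module("tensorflow")
        except ImportError:
            return None  # TensorFlow is not installed at all
    # Switch off 2.x behaviours (such as eager execution) for graph-style code.
    tf.disable_v2_behavior()
    return tf


if __name__ == "__main__":
    tf = load_tf1_style()
    if tf is None:
        print("TensorFlow is not installed")
    else:
        print("TensorFlow 1.x-style API loaded")
```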

Reference

The EgoHands Dataset can be found here (though downloading it is not required):

Harrison @pythonprogramming.net’s [tutorial](https://pythonprogramming.net/creating-tfrecord-files-tensorflow-object-detection-api-tutorial/) on creating the required TFRecords files.

Training code was modified based on the script created by Matus Tanonwong:

Original Paper Detailing Single Shot Detectors (SSDs) by Liu et al.

Special thanks to Kathy Zhuang for being my partner in this project.